One of the most pressing ethical concerns in AI development is algorithmic bias. Because AI systems learn from data, they can inherit the biases present in the datasets they are trained on. This can result in unfair outcomes, such as biased hiring algorithms, discriminatory loan approvals, or skewed facial recognition systems. Biased AI can reinforce existing societal inequalities, disproportionately affecting marginalized groups.

Addressing this issue requires a commitment to transparency and diversity in AI training data. Developers must strive to create models that are not only accurate but also fair, considering the broader social implications of their use. This might involve auditing algorithms for potential biases, incorporating diverse perspectives into AI research, and developing standards for ethical AI deployment.
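As one illustration of what auditing an algorithm for bias can look like, the sketch below compares how often each group receives a favorable decision and reports the ratio between the lowest and highest rates. It is a minimal example under stated assumptions, not a complete fairness audit: the function names, the group labels, and the sample data are all hypothetical, and the 0.8 threshold is the commonly cited "four-fifths" rule of thumb rather than a standard every context should adopt.

```python
from collections import defaultdict

# Assumed review threshold (the "four-fifths" rule of thumb); adjust per context.
REVIEW_THRESHOLD = 0.8


def selection_rates(decisions):
    """Return the share of favorable decisions per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True when the model produced the favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, whether the model approved).
sample = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(f"selection rates: {rates}")
print(f"disparate impact ratio: {ratio:.2f}, needs review: {needs_review}")
```

A low ratio does not prove discrimination on its own, and a ratio near 1.0 does not rule it out. A check like this is best treated as a prompt for closer human review of the data and the model.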

Navigating the privacy dilemma involves finding a balance between leveraging data for innovation and respecting users’ rights to privacy. Concepts like data anonymization, transparency in data usage, and user consent play a critical role. Regulations such as the General Data Protection Regulation (GDPR) provide frameworks for data protection, but responsibility also falls on developers to prioritize ethical data practices. Acknowledging and addressing these concerns helps ensure that the benefits of AI do not come at the expense of individual privacy.

Automation and job displacement

Addressing job displacement requires a proactive approach to workforce retraining. Governments, businesses, and educational institutions must collaborate to provide training opportunities that equip workers with the skills needed for a changing job market. Furthermore, a commitment to designing AI systems that augment human capabilities, rather than simply replacing them, can help ensure a future where technological progress benefits everyone instead of creating new divisions.

Autonomous systems and the question of control

One of the most profound ethical dilemmas surrounding AI is the development of autonomous systems, such as self-driving cars, drones, and AI in military applications. When machines can make decisions without human intervention, questions arise about control and about how much decision-making we should entrust to AI. These questions are particularly sensitive for life-and-death decisions, such as those in healthcare or autonomous weapons systems.

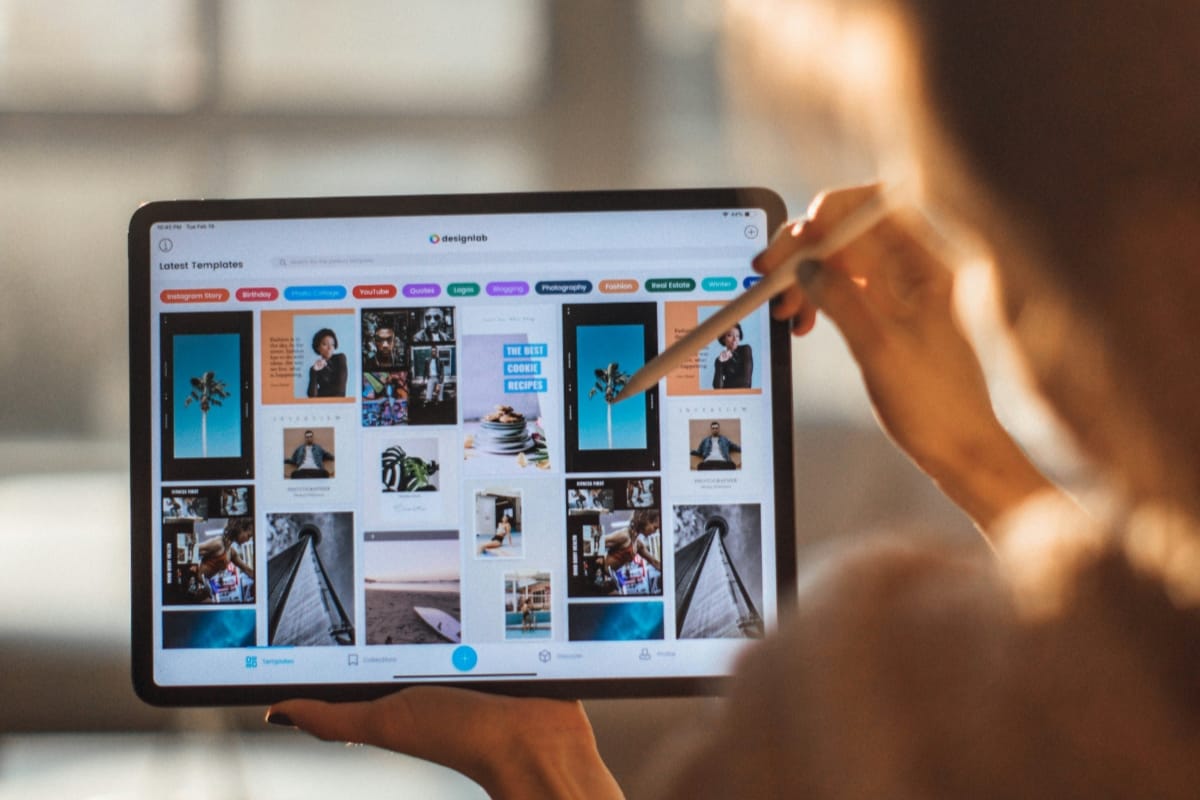

Developers must carefully consider the ethical boundaries of AI autonomy. This means ensuring that humans remain in the loop for critical decisions and establishing clear limits on the use of AI in scenarios where human judgment is irreplaceable. The goal should be to use AI as a tool that extends human capabilities, not as a replacement for human moral judgment.
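To make the idea of keeping humans in the loop more concrete, here is a minimal sketch of a routing gate that lets a system act on its own only for low-stakes, high-confidence decisions and escalates everything else to a person. The `Decision` class, the `route` function, the example actions, and the 0.9 confidence threshold are all hypothetical choices made for illustration; a real system would need domain-specific criteria for what counts as high stakes.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    confidence: float  # model's self-reported confidence, 0.0 to 1.0
    high_stakes: bool  # True when the outcome could cause serious harm


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Return "auto" only for low-stakes, high-confidence decisions.

    Anything high-stakes or uncertain is escalated to a human reviewer,
    regardless of how confident the model claims to be.
    """
    if decision.high_stakes or decision.confidence < min_confidence:
        return "human_review"
    return "auto"


# Hypothetical examples of how decisions would be routed.
routine = Decision("reorder warehouse stock", confidence=0.97, high_stakes=False)
uncertain = Decision("reorder warehouse stock", confidence=0.55, high_stakes=False)
critical = Decision("adjust medication dose", confidence=0.99, high_stakes=True)

for d in (routine, uncertain, critical):
    print(f"{d.action!r} -> {route(d)}")
```

The key design choice in this sketch is that the high-stakes flag overrides confidence: a confident model still cannot act alone where human judgment is considered irreplaceable.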

The role of AI in shaping societal values

AI systems are not just technical tools; they also reflect and shape societal values. The way AI is developed and deployed can influence what is considered acceptable or desirable in society. For instance, AI systems that prioritize efficiency over compassion in healthcare or profit over fairness in social services can shape societal norms in troubling ways.

This places a significant responsibility on AI developers and policymakers to ensure that AI technologies align with ethical values that promote the well-being of individuals and communities. This may involve embedding ethical principles into AI design, engaging in public dialogue about the role of AI in society, and ensuring that AI development is inclusive and considers the needs of all stakeholders.

The balance between innovation and responsibility is the key to a future where AI truly serves humanity.
